(Comic via http://xkcd.com/552/)

I have had an interesting fortnight in my role as a school leader and Research-lead. In this job, a lot of teacher training materials and the like get shared with you, sometimes coupled with, but most often decoupled from, the evidence. In just the last couple of weeks I have been repeatedly ‘exposed’ to popular zombie edu-theories that simply won’t die. Discredited ideas keep bouncing back, recast and relabeled as the promise for a new generation of hard-pressed teachers.

I’ve had the ubiquitous learning styles foisted into my inbox. The crumbling edifice that is the ‘learning pyramid’, or cone, or whatever it is branded as. I have seen a fistful of dubious GCSE programmes that proclaim that their evidence will secure the GCSEs of your students’ dreams. Sadly, I really could go on and on.

There is no easy antidote. Working with experts like Professor Rob Coe and Stuart Kime in the RISE project helps. Reading excellent blogs like this one from Nick Rose certainly helps too. Reading the newly created Edudatalab was a boon in this regard. Networks like ResearchEd and organizations like the Institute for Effective Education provide ballast to steady the ship against the rising tide of bullcrap.

What we eventually need is a workforce of teachers who are critical consumers of research evidence and powerfully evidence-informed.

Now, I have a huge amount to learn about research evidence, but one of the turning points in my understanding was when I grasped the difference between correlation and causation (a threshold concept for research evidence):

“Correlation is a statistical measure (expressed as a number) that describes the size and direction of a relationship between two or more variables. A correlation between variables, however, does not automatically mean that the change in one variable is the cause of the change in the values of the other variable.

Causation indicates that one event is the result of the occurrence of the other event; i.e. there is a causal relationship between the two events. This is also referred to as cause and effect.

Theoretically, the difference between the two types of relationships are easy to identify — an action or occurrence can cause another (e.g. smoking causes an increase in the risk of developing lung cancer), or it can correlate with another (e.g. smoking is correlated with alcoholism, but it does not cause alcoholism). In practice, however, it remains difficult to clearly establish cause and effect, compared with establishing correlation.” (Source: Australian Bureau of Statistics)
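To make the "expressed as a number" part concrete, here is a minimal sketch of the usual Pearson correlation coefficient. The two lists are invented purely for illustration (they are not real school data), and the names `pearson_r`, `tablets_bought` and `average_grade` are my own assumptions.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Return the Pearson correlation coefficient of two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of products of deviations from each mean (unnormalised covariance).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / sqrt(var_x * var_y)


# Hypothetical figures over five years: both simply rise over time.
tablets_bought = [10, 20, 30, 40, 50]
average_grade = [52, 55, 57, 61, 64]

r = pearson_r(tablets_bought, average_grade)
print(f"r = {r:.3f}")  # close to +1: a strong correlation, but no proof of cause
```

A value near +1 or -1 only says the two lists move together. Anything else that rose over the same five years would correlate just as strongly with the grades.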

This is of course of crucial importance in schools. We are constantly being sold silver bullets whose evidence is based on loose correlation (or worse) and nothing like causation. Fundamentally, we must move toward better evaluating what we do. We can ask the question: when do we attempt to put a control group and a treatment group in place for our latest innovations? Finding evidence of causation is obviously very tricky: it requires decent controls and a transparent statistical model that doesn’t fiddle the numbers to dredge up a positive result.
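As a rough sketch of what putting a control group and a treatment group in place can mean, the snippet below splits pupils at random and compares average scores afterwards. Everything here is hypothetical: the function names, the pupil labels and the scores are assumptions for illustration, not a recommended trial design.

```python
import random
from statistics import mean


def assign_groups(pupil_ids, seed=0):
    """Randomly split pupils into (treatment, control) halves."""
    rng = random.Random(seed)  # fixed seed so the split can be reproduced
    shuffled = list(pupil_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def difference_in_means(scores, treatment, control):
    """Mean score of the treatment group minus mean score of the control group."""
    return mean(scores[p] for p in treatment) - mean(scores[p] for p in control)


# Hypothetical pupils; the split is decided before the intervention starts.
pupils = ["P01", "P02", "P03", "P04", "P05", "P06", "P07", "P08"]
treatment, control = assign_groups(pupils, seed=42)

# Hypothetical end-of-term scores, collected after the intervention.
scores = {"P01": 58, "P02": 61, "P03": 49, "P04": 72,
          "P05": 66, "P06": 54, "P07": 63, "P08": 57}
print(difference_in_means(scores, treatment, control))
```

Random assignment is what stops us choosing the keenest class for the new thing. A raw difference in means on eight pupils proves very little on its own; a real evaluation would need a large enough sample and a pre-specified statistical test.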

Most ‘evidence’ in schools, and education more widely, fails this test.

The debate about evidence and what has value is now part of the educational landscape. The evidence of a randomised controlled trial is matched up against political ideologies and personal prejudices at every step. We are forced to navigate a minefield of information. Teachers don’t know what to believe and therefore they stop listening.

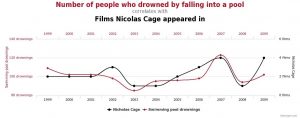

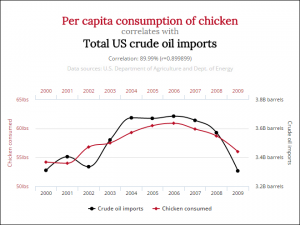

Of course, we in schools are guilty of this basic failing ourselves when we analyze our evidence. In our punitive accountability model we are not encouraged to honestly evaluate our interventions and their impact. We work backwards: we spent money on X, results improved generally = X caused the improvement and is worth the money. The perils of this lazy correlating pattern are brilliantly exposed by Tyler Vigen’s website, aptly entitled ‘Spurious Correlations’ (thanks Stuart Kime for sharing this gem). Take a look at these two graphs – as they’re graphs, we of course give them credence:

And there is this irrefutable evidence too!

These examples are comic, but it isn’t a quantum leap from them to our own estimations when we evaluate school spending and suchlike. We buy shiny new tablets, or we create a brilliant brain-friendly programme, and – hey presto – students do better. Our new thing is the thing, of course! School leaders and teachers can sink their heart and their next promotion into such interventions, so there are potent reasons not to evaluate well and not to properly seek out causation rather than dubious correlation. There are issues with control groups, or the efficacy of trials, but we should approach these head on in the pursuit of better evidence.

When presented with evidence we should question the correlation and causation. When setting up evaluations of our own we need to be mindful of this too. A new time-consuming intervention that costs teacher time and students’ curriculum time must be evaluated better if we really want to go some way to having robust evidence. We all have a long way to go.

Now, I’m off to watch a Nicolas Cage film and eat some chicken!
